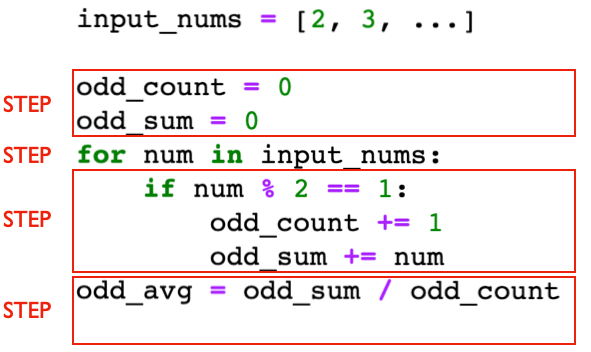

Even though functions B and C are "bigger" than function A, Big-O notation puts all three in the same set of functions, because they all have the same shape (straight lines). Big-O notation does not care about the following:

- if you add a fixed amount to a function (for example, line B is just line A shifted up)

- if you multiply a function by a constant (for example, line C is just line A multiplied by 3)

To formalize this a bit: if we want to claim that function $y=f(x)$ belongs to the same set as $y=g(x)$, Big-O analysis lets us multiply $g$ by some constant to create a new function, and then show that $y=f(x)$ lies on or beneath that new function. For example, line D is line A multiplied by a constant (in this case 5, though we could have chosen any constant we liked). D is above line C, therefore C is in the same complexity set as A. We're also allowed to look only at large N values (above a threshold of our choosing), so B is also in the same complexity set as A, because the B line is beneath the D line for all N values of 4 or greater.
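The figure doesn't give the lines' exact equations, so the sketch below assumes hypothetical stand-ins that keep the stated relationships: $A(N) = N$, B is A shifted up by 10 (an arbitrary choice), C is $3 \cdot A$, and D is $5 \cdot A$. The helper `first_n_where_bounded` is a name introduced here for illustration; it scans a finite range and reports the threshold from which one line stays on or beneath another.

```python
from typing import Callable, Optional

# Hypothetical stand-ins for the figure's lines (exact equations not given).
def A(n: int) -> int:
    return n  # baseline straight line

def B(n: int) -> int:
    return A(n) + 10  # A shifted up by a fixed amount (10 is an assumption)

def C(n: int) -> int:
    return 3 * A(n)  # A multiplied by 3

def D(n: int) -> int:
    return 5 * A(n)  # A multiplied by the constant 5


def first_n_where_bounded(f: Callable[[int], int], g: Callable[[int], int],
                          n_max: int) -> Optional[int]:
    """Smallest n in [1, n_max] such that f(m) <= g(m) for every m in [n, n_max].

    Returns None if no such n exists (i.e., f is above g at n_max itself).
    """
    start: Optional[int] = None
    for n in range(1, n_max + 1):
        if f(n) <= g(n):
            if start is None:
                start = n  # candidate threshold
        else:
            start = None  # bound broken, so any earlier candidate is invalid
    return start


print(first_n_where_bounded(C, D, 1000))  # 1: C is beneath D everywhere
print(first_n_where_bounded(B, D, 1000))  # 3: B is beneath D only once N >= 3
```

With these made-up numbers, B drops beneath D from N = 3 onward. The exact threshold depends on how far B is shifted up (here $N + 10 \le 5N$ exactly when $N \ge 2.5$); a bigger shift pushes the threshold to the right, but for a straight line some threshold always exists.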

In contrast, E is not beneath line D. Of course, we could have used a different constant multiple on the A line to produce the D line, such as $D(N) = 999999 \cdot N$. However, it doesn't matter which constant we use: we'll still end up with a straight line, and no matter how steep it is, the E line will eventually surpass it, because E keeps getting steeper and steeper. E is NOT in A's complexity class; its y values fundamentally grow faster as N increases.
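To make "eventually surpass it" concrete, here is a small sketch that assumes E is the quadratic $E(N) = N^2$ (the figure doesn't state E's equation, so this is only a stand-in for a curve that keeps getting steeper). The hypothetical helper `crossover` finds the first N at which E rises above the straight line $c \cdot N$ for several choices of the constant $c$, including the 999999 mentioned above.

```python
# Hypothetical: E(N) = N**2 stands in for the steepening curve E.
def E(n: int) -> int:
    return n * n


def crossover(multiplier: int) -> int:
    """Smallest N >= 1 at which E(N) exceeds the straight line multiplier * N."""
    n = 1
    while E(n) <= multiplier * n:
        n += 1
    return n


for c in (5, 1000, 999_999):
    print(c, crossover(c))  # for E(N) = N**2 the crossover is always c + 1
```

Since $N^2 > cN$ exactly when $N > c$, a steeper line only moves the crossover point to the right; it never removes it. The linear scan is deliberately naive and takes about a million steps for the last constant.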

Let's write a more precise mathematical definition to capture this intuition:

Definition: If there is some fixed constant $C > 0$ such that $f(N) \le C \cdot g(N)$ for all sufficiently large N (that is, for every N above some threshold $N_0$), then $f(N) \in O(g(N))$.
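The definition names two knobs: the constant $C$ and the threshold $N_0$. A minimal sketch of checking a proposed pair is shown below; the function `satisfies_bound` and the example $f(N) = 3N + 10$ are illustrative assumptions, not part of the original text. Because it only tests a finite range, it can refute a proposed $(C, N_0)$ or lend evidence for it, but it cannot prove the inequality for all large N.

```python
from typing import Callable


def satisfies_bound(f: Callable[[int], float], g: Callable[[int], float],
                    c: float, n0: int, n_max: int) -> bool:
    """Check f(N) <= c * g(N) for every N in [n0, n_max] (a finite sanity check only)."""
    return all(f(n) <= c * g(n) for n in range(n0, n_max + 1))


def f(n: int) -> int:
    return 3 * n + 10  # hypothetical f(N) = 3N + 10


def g(n: int) -> int:
    return n  # g(N) = N


print(satisfies_bound(f, g, c=4, n0=10, n_max=10_000))  # True: 3N + 10 <= 4N once N >= 10
print(satisfies_bound(f, g, c=4, n0=5, n_max=10_000))   # False: at N = 5, 25 > 20
```

The actual proof here is one line of algebra: $3N + 10 \le 4N \iff N \ge 10$, so $C = 4$ and $N_0 = 10$ witness $3N + 10 \in O(N)$. Picking the threshold too low (as in the second call) breaks the bound even though the claim itself is true.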

How does categorizing functions into complexity classes (sets) relate to algorithm performance? Let's look at the previous plot again, with some new labels: